If you work as an SEO consultant, one of your missions is to understand how the market evolves so that you can adapt your strategy. Why? Because it is easier to get results when you get there first, and you must always adapt to your customers' changing behavior.

Several tools can help with this task, but we tend to overlook the most obvious one: Google Suggest.

What is Google Suggest?

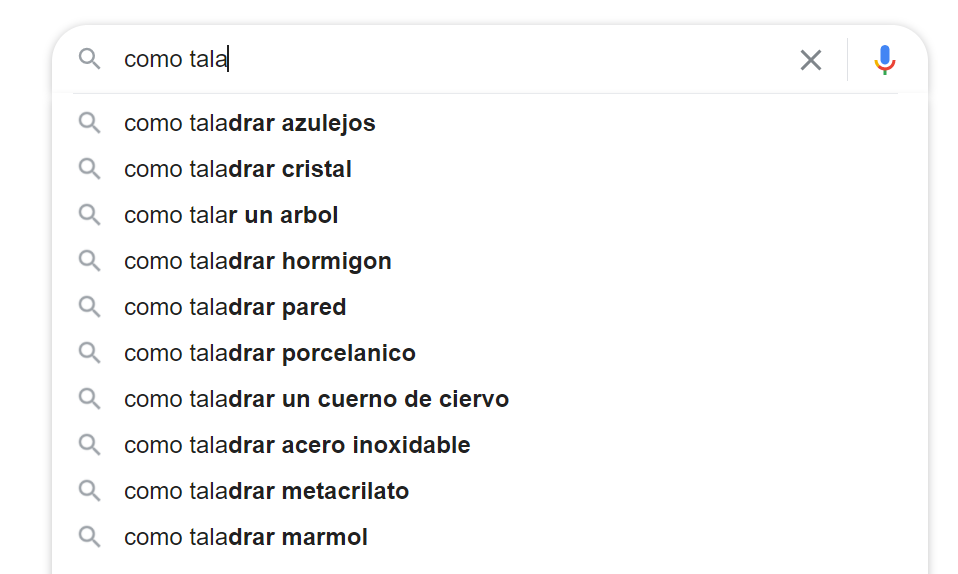

Everyone uses Google Suggest, most people without even knowing it. When you start typing a search on Google, suggestions appear based on:

- The version of Google you use

- Your language

- Your search history

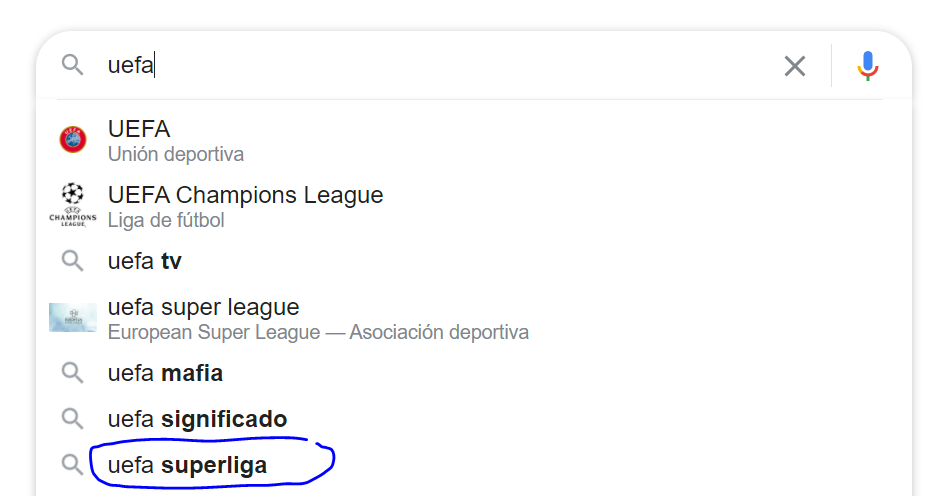

Although no keyword tool shows a meaningful search volume for this query yet, I know it will get plenty over the next few days. So does Google, which is why it suggests it to me.

This is useful, but extracting data from this tool by hand is tedious. Fortunately, there is an API that speeds up the process. The best part? You can query it without any complex configuration.

TL;DR

If you only want the result and not the details of the extraction, go directly to the Google Colab notebook I created and follow the instructions.

If you want the details of the code (written in Python), read on.

Explanation of the code

The code is quite simple, but I am going to explain each of the steps so that you can understand it and adapt it if you wish.

Loading libraries

First, we load the libraries. Most of them are well known (Pandas, Requests, JSON and BeautifulSoup), and you can easily find tutorials for them online. The only less common element is ascii_lowercase from the string module: it is not a function but a string constant containing the 26 lowercase letters ("abcdefghijklmnopqrstuvwxyz"), which makes it easy to loop over the alphabet.

Why do I need it? You will understand this in one of the following sections.
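Because ascii_lowercase is a plain string, there is nothing to call: you can print it, measure it, or iterate over it directly, and each iteration yields one letter. A minimal check:

```python
from string import ascii_lowercase

# ascii_lowercase is a str constant, not a function: no parentheses needed
print(ascii_lowercase)       # abcdefghijklmnopqrstuvwxyz
print(len(ascii_lowercase))  # 26

# Iterating over a string yields its characters one at a time
first_three = [c for c in ascii_lowercase[:3]]
print(first_three)           # ['a', 'b', 'c']
```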

```python
# Load libraries
import pandas as pd
import requests
from bs4 import BeautifulSoup
from string import ascii_lowercase
import json
```

Enter the seed keyword and country

The Google Suggest API needs a query to return suggestions, so you must provide a seed keyword. In the Google Colab notebook (linked at the beginning of the article), you can enter the word you want without modifying the code.

This works thanks to the #@param {type:"string"} comment at the end of each line, which tells Google Colab to display the variable as a form field whose value the user can edit. You can read more about this functionality here.

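```python
# Get input from users
# Seed keyword ("como taladrar" is Spanish for "how to drill")
seed_kw = "como taladrar" #@param {type:"string"}
# Two-letter code used both as the Google Suggest language (hl) and the Keyword Surfer country
country = "es" #@param {type:"string"}
```

Extract suggestions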

This part allows you to extract suggestions using the Google Suggest API.

What does it do exactly?

- It creates a list of keywords by appending each letter of the alphabet to your seed keyword. If you enter shoes, you get shoes a, shoes b, etc.

- Following the same logic, it adds your keyword followed by each digit from 0 to 9 to the same list.

- It extracts the suggestions returned by the Google Suggest API for each of these combinations.

Why this process? Because the Google Suggest API returns at most 10 suggestions per query. Appending letters and digits to the seed keyword produces 37 queries (the seed itself, plus 26 letters, plus 10 digits), so a single run can collect up to 37 × 10 = 370 suggestions before duplicates are removed.
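To make both steps concrete, here is a small sketch that expands a seed keyword into the seed plus 26 letter queries and 10 digit queries (37 in total), and parses a response in the shape the toolbar output is generally observed to return: `<suggestion data="..."/>` elements nested inside `<CompleteSuggestion>`. The sample response below is hand-written for illustration, not real API output, and the function names expand_seed and parse_toolbar_xml are hypothetical. The sketch also swaps BeautifulSoup for the standard library's xml.etree.ElementTree so it runs without extra dependencies.

```python
import xml.etree.ElementTree as ET
from string import ascii_lowercase


def expand_seed(seed):
    """Return the seed plus one query per letter a-z and per digit 0-9."""
    queries = [seed]
    queries += [f"{seed} {c}" for c in ascii_lowercase]
    queries += [f"{seed} {d}" for d in range(10)]
    return queries


def parse_toolbar_xml(xml_text):
    """Return the data attribute of every <suggestion> element."""
    root = ET.fromstring(xml_text)
    return [el.get("data") for el in root.iter("suggestion")]


queries = expand_seed("shoes")
print(len(queries))                  # 37
print(queries[1], "|", queries[-1])  # shoes a | shoes 9

# Hand-written sample in the assumed toolbar format (not real API output)
sample = (
    "<toplevel>"
    '<CompleteSuggestion><suggestion data="shoes adidas"/></CompleteSuggestion>'
    '<CompleteSuggestion><suggestion data="shoes amazon"/></CompleteSuggestion>'
    "</toplevel>"
)
print(parse_toolbar_xml(sample))     # ['shoes adidas', 'shoes amazon']
```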

```python
# Start the keyword list with the seed keyword itself
keywords = [seed_kw]

# Create additional seeds by appending a-z and 0-9 to it
for c in ascii_lowercase:
    keywords.append(seed_kw + ' ' + c)
for i in range(10):
    keywords.append(seed_kw + ' ' + str(i))

# Get all suggestions from Google
sugg_all = []
for kw in keywords:
    # Passing the query through params lets requests URL-encode spaces and accents
    r = requests.get(
        'http://suggestqueries.google.com/complete/search',
        params={'output': 'toolbar', 'hl': country, 'q': kw},
        timeout=10,
    )
    soup = BeautifulSoup(r.content, 'html.parser')
    # Each suggestion comes back as <suggestion data="..."/> in the XML response
    sugg = [s['data'] for s in soup.find_all('suggestion')]
    sugg_all.extend(sugg)
```

Extracting search volumes

At the beginning of this article, I mentioned that search volumes are not always useful. That is true, but sometimes this information is worth having, for example when you use Google Suggest data to build the architecture of an e-commerce site.

Many APIs provide this data, but for this tutorial I chose the one offered by Keyword Surfer:

- It is free

- It does not require an account or password

- It does not have many limitations

- It can return volumes for up to 50 keywords in a single request

It is not perfect, especially for keywords with fewer than 50 monthly searches, but you can replace it with another API if you prefer. This part of the code retrieves the search volume of each suggestion returned by the Google Suggest API, for the country you entered at the start of the script.
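The request URLs in the next code block are assembled by hand, which makes them hard to read. As an illustration, this sketch wraps the same string construction in a function and prints one URL: %22 is a URL-encoded double quote and %20 a space, so the keywords parameter reads as a JSON-style list. The chunk size of 25 matches the code; the function names chunk and surfer_url and the second keyword are hypothetical. It also works out how many requests a run needs: with the maximum of 370 suggestions (37 queries × 10), that is ceil(370 / 25) = 15.

```python
import math


def chunk(items, size=25):
    """Split a list into consecutive slices of at most `size` items."""
    return [items[x:x + size] for x in range(0, len(items), size)]


def surfer_url(country, keywords):
    """Build one request URL with the same string format as the article's code.

    Spaces become %20 and each keyword is wrapped in %22 (double quotes),
    giving keywords=["kw 1","kw 2",...] once decoded.
    """
    encoded = [kw.replace(" ", "%20") for kw in keywords]
    return (
        "https://db2.keywordsur.fr/keyword_surfer_keywords?country={}&keywords=[%22".format(country)
        + "%22,%22".join(encoded)
        + "%22]"
    )


url = surfer_url("es", ["como taladrar", "como taladrar pared"])
print(url)

# Worst case: 370 suggestions split into chunks of 25
n_requests = len(chunk(list(range(370))))
print(n_requests, math.ceil(370 / 25))  # 15 15
```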

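```python
# Remove duplicated suggestions (dtype=object keeps .str usable even if the list is empty)
sugg_all = pd.Series(sugg_all, dtype='object').drop_duplicates(keep='first')

if len(sugg_all) == 0:
    print("There are no suggestions. The script can't work :(")

# Get search volume data
# Prepare keywords for the URL by encoding spaces as %20
kws = sugg_all.str.replace(' ', '%20').unique()

# Divide the keywords into chunks of 25 (below the 50-keyword limit per request)
chunks = [kws[x:x + 25] for x in range(0, len(kws), 25)]

# Create a DataFrame to receive the data from the API
results = pd.DataFrame(columns=['keyword', 'volume'])

# Query the Keyword Surfer API once per chunk
for chunk in chunks:
    # keywords=[%22kw1%22,%22kw2%22] is a JSON-style list (%22 is a double quote)
    url = (
        'https://db2.keywordsur.fr/keyword_surfer_keywords?country={}&keywords=[%22'.format(country)
        + '%22,%22'.join(chunk)
        + '%22]'
    )
    r = requests.get(url, timeout=10)
    response = json.loads(r.text)
    # Keywords with a volume of 0 are not included in the response
    for key in response.keys():
        results.loc[len(results)] = [key, response[key]['search_volume']]
```

Save the results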

We are almost done! We only need to flag the words that have no searches (by default, the Surfer API omits words with a volume of 0) and save the result to a CSV file that we can open later in Google Sheets or Excel.
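A minimal sketch of this last step with pandas: every suggestion missing from the API response is added back with a volume of 0 through a left merge. It assumes the suggestions list and a results DataFrame with keyword and volume columns, as built above, and it assumes the keys in the Keyword Surfer response match the suggestion text exactly (with spaces, not %20). The function name with_zero_volumes and the sample volumes are made up for illustration only.

```python
import pandas as pd


def with_zero_volumes(suggestions, results):
    """Return every suggestion with its volume, using 0 when the API omitted it.

    Assumes results has 'keyword' and 'volume' columns and that its keywords
    match the suggestion strings exactly.
    """
    all_kws = pd.DataFrame({"keyword": pd.Series(suggestions, dtype="object").drop_duplicates()})
    # A left merge keeps every suggestion; missing volumes become NaN
    merged = all_kws.merge(results, on="keyword", how="left")
    merged["volume"] = merged["volume"].fillna(0).astype(int)
    return merged.reset_index(drop=True)


# Hypothetical inputs: the third suggestion is absent from the API response
suggestions = ["como taladrar", "como taladrar pared", "como taladrar azulejo"]
results = pd.DataFrame({"keyword": ["como taladrar", "como taladrar pared"], "volume": [100, 50]})

table = with_zero_volumes(suggestions, results)
csv_text = table.to_csv(index=False)
print(csv_text)
```

In the notebook, you would write the file with something like table.to_csv("google_suggest_volumes.csv", index=False) (the filename is only an example) and then import it into Google Sheets or Excel.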

Easy and fast!